Make

Introduction to project management with make

Getting the content for the practical

We will explore the wondrous world of make using a number of examples. To get your copy of these examples from the version control repository, log in to your favourite Linux machine (perhaps bluecrystal) and type:

svn co https://svn.ggy.bris.ac.uk/subversion-open/make/trunk make

Hello World

Without further ado, let's meet our first example:

cd make/examples/example1

List the files:

ls Makefile hello_world.c

and type:

make

Tada! We've successfully cleared the first and biggest hurdle--automating the (OK, modest) compilation task for hello_world.exe. Now try running it:

./hello_world.exe

We can remove unwanted intermediate object files by typing:

make clean

Or clean up the directory completely using:

make spotless

Well, you'll be pleased to know that the worst bit is over--we've used make to compile our program, and even tidied up after ourselves. From here on in, we’ll build on our first makefile as a foundation and just expand the scope of what we can do.

Key Concepts: Rules, Targets and Dependencies

Makefiles are composed of rules and variables. We'll see some variables soon. For now, let’s just look at some rules. A rule in a makefile has the general form:

Target : dependencies

Action1

Action2

...

Note there is a TAB at the start of any Action lines. You need one. Don't ask, don't ask. It’s just the way it is!

A rule can have many dependencies and one or more actions.

A rule will trigger if its dependencies are satisfied and either the target does not yet exist or at least one dependency is 'newer' than the target. If the rule is triggered, the actions will be performed. This all sounds rather abstract, so let's consider something more concrete. Take a look at the contents of Makefile in our example. In particular, the rule:

hello_world.o : hello_world.c

gcc -c hello_world.c -o hello_world.o

So, our target here is hello_world.o, our dependency is hello_world.c and our action is to use gcc to create an object file from the source code. Our dependency is satisfied, since the file hello_world.c exists. The file hello_world.o does not exist. In this case the rule will trigger and the compilation action will be performed. If the file hello_world.o did exist, make would compare the date-stamps on the files hello_world.o and hello_world.c. If the source code proved to be newer than the object code, the file hello_world.o would be updated using the specified action, i.e. gcc would be invoked again.

We see a similar pattern in the rule:

hello_world.exe : hello_world.o

gcc hello_world.o -o hello_world.exe

Last, but not least, we have:

all : hello_world.exe

This is a rule with a null action. Its only use is to specify a dependency.

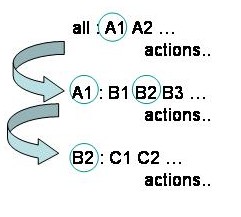

OK, how does make read a makefile?

When calling make in its default mode, it will look for a file called Makefile (or makefile) in the current directory and start reading it from the top down. It will read down until it finds the first rule and look to see if the dependencies are satisfied. If the dependencies are not satisfied, it will look for a rule, with a target matching the missing dependency, and so on. In this way, make chains down a sequence of rules until it finds a satisfied dependency and then will wind back up to its starting point, performing all the specified actions along the way.

For our simple makefile, the 'all' rule is first. The dependency is unsatisfied, but there is a rule with hello_world.exe as a target. The dependency on this second rule (hello_world.o) is also missing, but the dependency on the third rule is satisfied (the file hello_world.c exists). The associated action is performed, and hello_world.o is created. Winding back up the chain, the other rules can now also trigger and hello_world.exe is created. Hurrah!

Before we leave this example, there are two more rules to look at—the clean and spotless rules. These tidy up any files we created using the makefile. It is good practice, and rather handy, to write these rules. They both have the questionable distinction of being designated PHONY. This indicates that the targets do not refer to a real file. Since these rules are not part of any chain, we must call them explicitly by name to trigger them, i.e. make clean, or make spotless. They have no dependencies and the targets don’t exist, so the actions will always be triggered.

Note that if you type make clean (.o files deleted) and then make again, the source code will be recompiled, despite the presence of a valid executable. Make will ensure that the whole chain of dependencies is intact.
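Putting the pieces of this section together, a makefile along these lines would behave as described. It is only a sketch: the three build rules are those quoted above, but the bodies of the clean and spotless rules are an assumption based on what the text says they remove, and the file in example1 may differ in detail. Remember that each action line must begin with a TAB.

```make
# Sketch of a makefile like the one in example1.
# 'all' comes first, so it is the rule make starts from by default.
all : hello_world.exe

# Link the executable from the object file.
hello_world.exe : hello_world.o
	gcc hello_world.o -o hello_world.exe

# Compile the object file from the source code.
hello_world.o : hello_world.c
	gcc -c hello_world.c -o hello_world.o

# clean and spotless name tasks rather than files, so mark them as phony.
.PHONY : clean spotless

# Assumed action: remove the intermediate object file only.
clean :
	rm -f hello_world.o

# Assumed action: remove everything that make created.
spotless :
	rm -f hello_world.o hello_world.exe
```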

More Files, I Say, More Files!

For the previous example, we had only one source code file to compile--hello_world.c. In the real world, programs grow rather rapidly and we end up keeping the source code in a number of files. More convenient for editing, certainly, but less convenient for compiling. Now we must compile each piece of source code individually and then link all the object files together. If we edit some source, we must keep track of which files to recompile and, of course, relink. If we forget, we can be left wondering why the changes we just made didn't have the effect we were expecting. Oh boy! It can give you a headache. This is where make really starts to find its wings! Write a short makefile and thereafter you don't have to worry about those things. As you develop your code, just type make and bingo! Make will compile and link all and only what is necessary, and you'll have a fully up-to-date executable every time. Nirvana:)

Let's take a look at the second example:

cd ../example2

In Makefile, you will see that we've begun using variables. For example:

OBJS=main.o math.o file.o

The variable OBJS contains a list of all the object files that will result from the compilation and which we will link together to create the executable. For flexibility we have put the name of the executable into a variable too.

The compilation rules are much as before, except we have one for each source code file. We can make good use of our OBJS variable in the linking and clean-up rules. For example, we have used:

$(EXE): $(OBJS)

gcc $(OBJS) -o $(EXE)

instead of:

myprog.exe: main.o math.o file.o

gcc main.o math.o file.o -o myprog.exe

In doing so, we have far less repetition and the rule will stand no matter how many object files we have and what we call the executable.

Let's try it out now. Type make, followed by ./myprog.exe. Look at the chaining from the all rule in the makefile and notice the order of events reported by make. We can update the time-stamp on one of the source code files by typing:

touch math.c

Type make again now and see which files are recompiled and relinked. As before, we can use make clean and make spotless to tidy up. Lovely jubbly!

Weird and Wonderful Characters

Now, you will no doubt have spotted some patterns in the previous makefile. For example, the actions to compile the source code files were essentially the same for each of the files. Through the use of some wonderfully esoteric character sequences, we can streamline our makefile a good deal. We'll learn to love these ugly ducklings. Let's see how:

cd ../example3

Looking at the makefile, we can see some funky character sequences. Working down from the top, we see that the variable assignments are unchanged from last time. We do see a change in the rule which links the executable, however:

$(EXE): $(OBJS)

gcc $^ -o $@

The target and dependencies are fine, but what's happened to the action part? We've made use of some automatic variables in the shape of $^ and $@. These are rather useful and we'll start to see automatic variables cropping up quite often. $^ takes the value of all the dependencies of a rule and $@ takes the value of the target. Automatic variables are also used in the next rule, and allow us to make it rather general purpose, hugely reducing the need for makefile maintenance should our build change. Let's see how. The second rule:

$(OBJS): %.o : %.c defs.h

gcc -c $< -o $@

What on earth is that?! I know. I said they were queer fish, but it's OK, don't panic. This is the format for a static pattern rule. You'll love these pups. Honest. Let's calmly walk through the rule.

We have an extra colon on the top line. Before the first colon is a list of targets to which the rule will apply. It will apply to each target in the list in turn. We have used the list of object files--main.o, math.o and file.o. So, the first target will be main.o, the second will be math.o and so on. Next--between the first and second colon--is the target-pattern. This is matched against each target in turn so as to extract part of the target name, called the stem. The stem is then substituted into the dependency-pattern to create the dependency corresponding to each target. We can summarise the general form of a static pattern rule as:

targets.. : target-pattern : dependency-pattern(s) other-deps..

actions

For our particular rule, the first target is main.o. The target-pattern %.o is matched to this, giving a stem of main. The dependency-pattern, %.c, becomes main.c, as a consequence. Neat! Given some automatic variables, we can now write a single rule which will compile all our source code files. The variable $< has the value of the first dependency only.
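To make the substitution concrete, here is what the single static pattern rule stands for once make has worked through the target list. This is an illustrative expansion written out by hand, assuming the source files are named main.c, math.c and file.c to match the object files; it is not a file from the examples directory.

```make
# Longhand equivalent of:  $(OBJS): %.o : %.c defs.h
# with OBJS=main.o math.o file.o

# stem 'main':  $< is main.c (the first dependency), $@ is main.o
main.o : main.c defs.h
	gcc -c main.c -o main.o

# stem 'math'
math.o : math.c defs.h
	gcc -c math.c -o math.o

# stem 'file'
file.o : file.c defs.h
	gcc -c file.c -o file.o
```

Note that defs.h appears as a dependency of every object file but is never passed to the compiler, because $< picks out only the first dependency.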

Let's see how all this works in practice. Type make, and pay attention to each instantiation of the rule as it is echoed to the screen.
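If you would like to see the automatic variables in isolation, a throwaway makefile can simply echo them. This is a minimal sketch: the targets demo, a and b are hypothetical, chosen purely for illustration, and are not part of the example directories.

```make
# None of these targets is a real file, so declare them all phony.
.PHONY : demo a b

# Print the automatic variables as make sees them for this rule.
demo : a b
	@echo "target:    $@"
	@echo "first dep: $<"
	@echo "all deps:  $^"

# Do-nothing rules so that the dependencies a and b can be satisfied.
a b :
	@true
```

Saved under a made-up name such as autovars.mak and run with make -f autovars.mak, it should report demo as the target, a as the first dependency and a b as the full list.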

Fortran90 Modules: Ordering and Dependencies

Fortran90 is a popular language for scientific programming and for that reason alone, we should include an example makefile which is used to compile and link it. The use of modules in Fortran90 gives us reason to consider the order in which the source code files are compiled and also the dependencies between them. Let's take a look:

cd ../example8

You will see some Fortran90 files:

constants.f90 main.f90 utils.f90

and some makefiles:

first.mak second.mak third.mak

Note that I have included a symbolic link also:

Makefile -> third.mak

OK, enough of the listings, let's try the first makefile:

make -f first.mak

Using the gfortran compiler, I get the following error message:

gfortran -c utils.f90 -o utils.o

utils.f90:12.27:

use constants, only: pi

1

Fatal Error: Can't open module file 'constants.mod' for reading at (1): No such file or directory

make: *** [utils.o] Error 1

If we take a look inside first.mak:

OBJS=utils.o constants.o main.o

we see that utils.o is the first in the list of object files and so utils.f90 will be the first to be compiled--the ordering in lists in makefiles is important. However, the routines in utils.f90 make use of pi, included from the constants module, e.g.:

real function surface_area_sphere(radius)

use constants, only: pi

implicit none

real,intent(in) :: radius

surface_area_sphere = 4.0 * pi * radius**2.0

end function surface_area_sphere

and so the Fortran compiler will look for a file called constants.mod--produced as a side-effect of compiling constants.f90--when it is compiling utils.f90. In this case, the .mod file for the required Fortran90 module was not found and we got an error as a result. In order to correct this, we need to adjust the order of compilation in the makefile. Take a look at the OBJS variable in second.mak and try to compile again:

OBJS=constants.o utils.o main.o

make -f second.mak

This time we get a clean compilation, in the right order:

gfortran -c constants.f90 -o constants.o

gfortran -c utils.f90 -o utils.o

gfortran -c main.f90 -o main.o

gfortran -o myprog.exe constants.o utils.o main.o

and we can run the executable program, myprog.exe:

Given a radius of (km): 6357.000

The surface area of the earth is (km^2): 5.0782483E+08

and has a volume of (km^3): 1.0760809E+12

"Great!" we say, "we've cracked it!" Well yes, we can now compile correctly from a clean start. However, recall that one of the great benefits of make is that it can determine what parts of a program need to be recompiled if we have made a change to the source code. That is a real bonus. Let's see if our makefile can determine what work needs to be done. Let's refresh the date stamp on constants.f90, simulating a code change:

touch constants.f90

and re-make:

make -f second.mak

The result is:

gfortran -c constants.f90 -o constants.o

gfortran -o myprog.exe constants.o utils.o main.o

constants.o is re-made and so is the executable. We're missing something, however. Since utils.f90 contains the use constants statement, a change to constants.f90 is very likely to affect the behaviour of the routines and so utils.o should also be re-made. It was not re-made because we have a missing dependency. We need to include some more information in our makefile. Take a look at third.mak. We have two new rules:

utils.o : utils.f90 constants.f90

main.o : main.f90 utils.f90

When make chains through the rules, starting with:

all: $(EXE)

It will examine all rules and their dependencies. It will find two rules with the target utils.o--both equally valid. The rule we have just added extends the dependencies to include both utils.f90 and constants.f90. If either of these dependencies is newer than utils.o, all pertaining actions will be triggered and the file re-made. Note our new rule is 'empty'. This is because we have all the actions we need (i.e. to recompile utils.f90) already included in the static pattern rule.

Some compilers will allow the creation of such dependency rules on-the-fly. You can use the -M option with both gcc (for C files) and g95 (for Fortran) to obtain dependency rules, which you can re-direct to a file and subsequently include in your makefile--see the section Going Further and the Wider Context. gfortran will follow suit in an upcoming release.

This third makefile now contains all that we need. Makefile is a symbolic link to it, so we can re-touch constants.f90, type make and all will be re-made correctly.
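For reference, a makefile with the behaviour described in this section might look like the following. It is a sketch rather than a copy of third.mak: the OBJS ordering and the two dependency rules come from the text, while the EXE variable, the all rule and the exact form of the link and compile rules are assumptions chosen to reproduce the commands echoed earlier.

```make
# Assumed names, following the earlier C examples.
EXE=myprog.exe

# Order matters on a clean build: constants.mod must exist before
# utils.f90 is compiled.
OBJS=constants.o utils.o main.o

all : $(EXE)

# Link step, written to match the echoed command
#   gfortran -o myprog.exe constants.o utils.o main.o
$(EXE) : $(OBJS)
	gfortran -o $@ $^

# One static pattern rule compiles every Fortran90 source file.
$(OBJS) : %.o : %.f90
	gfortran -c $< -o $@

# Action-free rules: they only add dependencies, so that touching
# constants.f90 also triggers a recompile of utils.f90.
utils.o : utils.f90 constants.f90
main.o : main.f90 utils.f90
```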

If, Then and Else

Next up are conditionals. Sometimes it's useful to say if this, do that, otherwise, do something else and makefiles are no exception. For example, you may want to compile your program under both Windows and Linux. It's quite likely that your compile commands will be a little different in this case. Using conditionals, you can write a makefile which you can use for both operating systems. Let's take a look at our next makefile:

cd ../example4

In the makefile we have a conditional based upon the value of the variable ARCH:

# A conditional based on the value of the variable 'ARCH'

ifeq ($(ARCH),Win32)

CC=ccWin32

OBJ_EXT=obj

else

CC=gcc

OBJ_EXT=o

endif

Inside the conditional, we set the values of the variables CC (our C compiler name) and OBJ_EXT according to whether we are building on Windows or Linux. We can make use of the values of these variables in other assignments, e.g.:

OBJS=main.$(OBJ_EXT) math.$(OBJ_EXT) file.$(OBJ_EXT)

where we will get object files named main.obj etc. on Windows and main.o etc. on Linux. We can also embed conditionals in rules. For example, our compilation rule this time looks like:

$(OBJS): %.$(OBJ_EXT) : %.c defs.h

ifeq ($(ARCH),Linux)

$(CC) -c $< -o $@

else

@echo "This is what would happen on Windows"

@echo $(CC) -c $< -o $@

echo "Note what happens to an echo without the @"

endif

where we can choose the compiler, perhaps compiler flags etc. etc. based on the architecture we're using. Since we're not using Windows, I've just echoed the action for Win32. Try setting ARCH to both Linux and Win32 and watch the results. Note the silencing effect of a leading @ on an action line.
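One way to experiment with ARCH is to give it a default inside the makefile and override it from the command line, e.g. make ARCH=Win32. The fragment below is a sketch of that idea and is not taken from example4: the ?= default and the show target are additions for illustration only.

```make
# Hypothetical default: ?= assigns only if ARCH has not already been set,
# for instance on the command line (make ARCH=Win32) or in the environment.
ARCH ?= Linux

# The conditional from the text, unchanged.
ifeq ($(ARCH),Win32)
CC=ccWin32
OBJ_EXT=obj
else
CC=gcc
OBJ_EXT=o
endif

# Hypothetical helper target: report the settings without compiling anything.
.PHONY : show
show :
	@echo "ARCH=$(ARCH) CC=$(CC) OBJ_EXT=$(OBJ_EXT)"
```

With this fragment, make show should print ARCH=Linux CC=gcc OBJ_EXT=o, while make ARCH=Win32 show should print ARCH=Win32 CC=ccWin32 OBJ_EXT=obj.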

If Your Makefiles Get Large--Split 'em Up!

We noted earlier that as a project grows we inevitably end up splitting the source code over a number of files. This can also be true for your makefiles. Make provides a simple mechanism for doing this. Using the include statement, you can embed the contents of one makefile inside another. In this way we can keep the size of any individual makefile to manageable proportions. One word of caution, however. You'll remember that make reads down from the top of a makefile and will try to execute the first rule it encounters by default. Since make takes the contents of one makefile and drops it directly into another, we must be careful about the order in which we include things. We could accidentally include a rule from another makefile before our intended default rule, conventionally the one named all. Let's take a look at our next example:

cd ../example5

In this case, we have an include statement right at the top of the makefile:

include macros.mak

Inside macros.mak, we have only variable assignments and so we are safe to include it at the top of the main makefile:

OBJS=main.o math.o file.o

EXE=myprog.exe

We have another include statement at the end of the file:

include clean.mak

We have grouped all the rules relating to the tidy-up into clean.mak:

.PHONY: clean spotless

clean:

\rm -f $(OBJS)

spotless:

\rm -f $(OBJS) $(EXE)

but since we included it after the first (all) rule, we are safe to do this. Notice that clean.mak contains references to variables only defined in macros.mak. Since both macros.mak and clean.mak are effectively cut and pasted into the file Makefile, make reports no errors and everything proceeds smoothly.

In previous examples, we have called make with all the defaults, i.e. it will look for a file called Makefile and try to execute the first rule (all) by default. We've also seen how we can invoke a specific target, such as calling make clean. We can also pass a non-default makefile to make. For example we could type:

make -f clean.mak clean

or indeed,

make -f clean.mak

In this case, we are invoking the clean rule directly from the fragment of makefile in clean.mak (by virtue of it being the first rule in the latter case). From the output, you can see that the variables OBJS and EXE are empty in this case and that the rules only make sense when embedded in the composite.
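The ordering constraint is easiest to see with the whole top-level file in view. The sketch below shows one plausible layout for the main makefile in example5. The two include lines are as quoted above; the rules in between are assumed to be the same as those in example3.

```make
# macros.mak holds only variable assignments (OBJS, EXE),
# so it is safe to pull in before any rule.
include macros.mak

# First rule in the composite file, and therefore the default.
all : $(EXE)

# Assumed to match the link and compile rules from example3.
$(EXE) : $(OBJS)
	gcc $^ -o $@

$(OBJS) : %.o : %.c defs.h
	gcc -c $< -o $@

# clean.mak contains rules, so it is included after 'all'.
# Moving this line to the top would make 'clean' the default rule.
include clean.mak
```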

Rallying Other Makefiles to Your Cause

In the previous example we looked at breaking up our makefiles into smaller, more manageable parts as our projects grow. The include statement is useful for makefiles in the same directory, but sometimes it's convenient to split our projects over several directories. What then? In this case you can invoke a sub-make process from inside the first makefile, i.e. call make--perhaps after moving to a different directory--as part of the actions of a rule. In this way, we can cascade down a tree of directories, calling make appropriately as we go. I have found this approach to be useful, but it would be remiss of me not to point out a paper which was written to highlight some of the dangers of this approach: ["Recursive Make Considered Harmful"]

OK, let's look at our next example:

cd ../example6

In this makefile, we see a healthy swathe of variables at the top of the file, including a set pointing to a subdirectory and the name of a library of routines that we wish to create inside:

LIBDIR=mylibdir

LIBNAME=libfuncs.a

LIBFULLNAME=$(LIBDIR)/$(LIBNAME)

It is very convenient to package up the files for a third party library--or one of our own--in such a subdirectory. We will build the library in that directory (mylibdir in this case) as part of the top-level build and link to it accordingly.

Inside main.c we include the library header file and so we augment the include path accordingly in the compilation rule:

$(OBJS): %.o : %.c defs.h $(LIBDIR)/func.h

gcc -I$(LIBDIR) -c $< -o $@

The link rule has a dependency upon the compiled library:

$(EXE): $(OBJS) $(LIBFULLNAME)

gcc $^ -o $@

and we have a matching target for the rule which will call our sub-make:

$(LIBFULLNAME):

\cd $(LIBDIR); $(MAKE) $(LIBNAME)

We have a built-in variable called $(MAKE) available, which takes the value of the make command we used to invoke the top-level process. Typically this is simply make, but the variable is useful if, for instance, GNU make is installed on your system as gmake. Note that we are explicitly calling a target in the sub-makefile with the value of $(LIBNAME). This is not strictly necessary, but can aid clarity, and we must know the name of the library at link-time in any case.

The clean-up rules also use a recursive call, to request clean-up in the library sub-directory. For example:

clean:

\rm -f $(OBJS)

\cd $(LIBDIR); $(MAKE) clean

The makefile in mylibdir is familiar and largely autonomous:

OBJS=func.o

all: $(LIBNAME)

$(LIBNAME): $(OBJS)

ar rcvs $@ $^

$(OBJS) : %.o : %.c func.h

gcc $(CPPFLAGS) -c $< -o $@

.PHONY: clean spotless

clean:

\rm -f $(OBJS)

spotless:

\rm -f $(OBJS) $(LIBNAME)

We compile the object files and then create an archive of them in the $(LIBNAME) rule.

I say largely autonomous. Indeed, we could arrange for our sub-makefile to be independent of anything else. In this case, however, I have made use of the variable $(CPPFLAGS). This is not defined in the sub-makefile and so would be empty were it not for the export statement in the top-level makefile. This has the effect of exporting the value of all variables to any sub-make files. Try changing the value of $(CPPFLAGS) and see the effect upon the overall program.
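The export mechanism can be sketched in a few lines of top-level makefile. This is an illustration, not the contents of example6: the value given to CPPFLAGS is hypothetical, and the recipe uses GNU make's -C option (change directory before reading the makefile) as an alternative to the cd form shown earlier.

```make
# Hypothetical flag, just so there is something to pass down.
CPPFLAGS=-DDEBUG

# A bare 'export' makes all variables visible to sub-make processes.
export

LIBDIR=mylibdir
LIBNAME=libfuncs.a
LIBFULLNAME=$(LIBDIR)/$(LIBNAME)

# Build the library by running make inside the library directory.
# 'make -C dir target' changes into dir before looking for a makefile.
$(LIBFULLNAME) :
	$(MAKE) -C $(LIBDIR) $(LIBNAME)
```

Because LIBNAME is exported along with everything else, the sub-makefile can refer to $(LIBNAME) without defining it, which is what the listing of the mylibdir makefile relies on.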

Not Just Compilation

So far, we have looked at makefiles solely concerned with building libraries and executables. Make is very flexible, however, and compiling source code is not the only task we can perform with it. If you are familiar with the typesetting language LaTeX, for example, you can write a makefile to create your presentation quality document from your set of typesetting source files. Then, as you work on your document, it is a simple matter of typing make to obtain the latest version of your PDF or Postscript file.

In the next example, we will look at 3 tasks which go hand-in-hand with, but are distinct from, code development:

cd ../example7

If you use the Emacs editor, you can use a program called etags to index your code. Given this index, Emacs can jump you to the definition of a function, perhaps in a different source code file, with the click of a mouse. Very handy! To create the index we have a rule called tags:

tags:

@ if [ -f TAGS ] ; then \rm TAGS ; fi

@ $(SHELL) -ec 'which etags > /dev/null; \

etags -a -o TAGS *.c *.h'

To try it out, type make tags and then open up main.c, say, in Emacs. Move the cursor to a function name and use M-. ("meta-dot") to jump to the definition of that function.

A crucial part of any software development process is testing. In a similar vein, we can write a rule which automates code testing. This can be a huge boon to a project as it makes testing very easy indeed and so encourages developers to test often, a proven recipe for success and efficiency in software development. Since the actions attached to a rule are arbitrary commands, we can bundle them up into a shell script, if we like, and simply call the shell script in the rule:

test: $(EXE)

test.sh

Inside the script, we could set up our model, run it and compare the outputs to a set of outputs we know to be correct. Put simply, test that the program is doing what it is supposed to be doing. The sky is really the limit here, as we can make our shell script as complex as we like. For example, I created a testing suite for a climate model in this way.

Try make test now and note the dependency on the executable. If it is not built (throw in a make spotless), the test rule will force compilation first, and then test the results. Things get quite exciting when you keep your source code inside a version control repository, such as that provided by [Subversion]. It is a simple matter to write a cron job to first get a copy of the latest version of the code and then to call make test. In this way, you can automatically build and test your project every night. Computers love to do these routine and sometimes mundane tasks! Nightly build and test suites are particularly useful for collaborative projects and significantly reduce debugging time, since recently created bugs are easier to find and fix.

Automatic documentation tools take appropriately formatted comments from your source code and turn them into professional quality project manuals. It is well established that the best way to keep documentation up-to-date (or let's be honest, written at all!) is to include it in the same file as the source code and to create it when you build the project. Two examples of automatic documentation tools are [doxygen] and [naturaldocs]. You could make use of these benefits using a make doc rule.

Indeed, if you use Subversion, you can arrange for test and documentation rules to be invoked each time a change is made to the source code repository. We can see then, that the combination of a few relatively simple code development tools can yield very significant benefits--enjoy!
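As a rough sketch of how test and documentation rules might sit side by side, consider the fragment below. It is not taken from example7: the doc rule assumes doxygen is installed and that a configuration file named Doxyfile exists in the current directory, and the test script is called as ./test.sh on the assumption that the current directory is not on your PATH.

```make
# Neither target names a real file.
.PHONY : test doc

# Build the executable if need be, then run the test script.
# $(EXE) is assumed to be defined earlier in the makefile.
test : $(EXE)
	./test.sh

# Assumed setup: doxygen reads its settings from a file called Doxyfile.
doc :
	doxygen Doxyfile
```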

Going Further and the Wider Context

The Pragmatic Programming course continues with a practical about debugging techniques: debugging.

The GNU make manual provides very useful reference and examples, once you have the basics under your belt. One such useful recipe is the automatic creation of dependency rules for source code files which make use of other files, such as header files in C, or common blocks or modules in Fortran (pp 37-39).

As the sophistication of your makefiles grows, you will find a large set of automatic variables and string manipulation tools listed in the manual to be very handy indeed.
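To give a flavour of both points, here is one common way of generating dependency rules automatically for C code with gcc. It is a sketch under stated assumptions and not necessarily the recipe given in the manual: it relies on gcc's -MMD and -MP options, reuses the file names from the earlier examples, and the DEPS variable is a name invented here.

```make
OBJS=main.o math.o file.o
EXE=myprog.exe

# String manipulation: swap each .o suffix for .d (main.d math.d file.d).
DEPS=$(OBJS:.o=.d)

all : $(EXE)

$(EXE) : $(OBJS)
	gcc $^ -o $@

# -MMD writes a .d file of dependency rules alongside each object file
# as a side effect of compiling; -MP adds a dummy target for each header
# so that deleting a header does not break the build.
%.o : %.c
	gcc -MMD -MP -c $< -o $@

# Read in the generated rules. The leading '-' tells make not to
# complain when the .d files do not exist yet, e.g. on a first build.
-include $(DEPS)
```

With this in place there is no need to list header files such as defs.h by hand. After the first build, editing a header causes exactly the object files that include it to be recompiled.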

Other sources of further reading include "GNU Make" by O'Reilly.

Make is not the only build tool out there and it would be remiss of me not to mention some of the others. Do not be dismayed, though, after working through the foregoing examples. The concepts and knowledge will stand you in good stead whichever build tool you adopt.

- Apache Ant: This shares many of the concepts and functionality of make, but makes use of XML for its rules file.

- Autotools: These tools are designed to assist in the (automatic) creation of makefiles for portability across Unix-like platforms.

- CMake: This is another automatic portability tool which creates makefiles on-the-fly from higher level configuration files.

A word of warning: While tools such as the autotools and cmake provide the potential to improve the portability of your build system, they can also increase complexity and the dependence upon further software. Think carefully about what will serve your project's needs best--a makefile which can be edited by those with the skills taught above, or a potentially much more complex setup. Remember that if the autotools or cmake do not produce the desired results, they will be harder to debug.